Multi-Gestures as VR Shortcuts

This page summarizes the project milestones that my partner Yi Li and I completed for the 3DUI graduate class at Georgia Tech. The project focuses on multi-gesture hand recognition in virtual reality. We explore potential use cases for 3D user interfaces and propose new graphical interaction techniques based on gesture recognition. We used the Oculus Quest with its hand-tracking SDK, and we received a perfect score of 100 on the graduate project!

- Client: Georgia Tech Graduate Project & Paper

- Website: GitHub Repo

- Paper Link: Graduate Paper

- Completed: May 1, 2020

Background

Virtual hand tracking has long been studied in human-computer interaction. In the past decade, companies such as Oculus and HTC have released commercial VR headsets whose hand controllers acted as basic hand trackers. The hand functionality of these controllers was initially limited to sensing the position of the hand in VR space and whether the user was pressing a button. There was no tracking of individual fingers or complex gestures.

Consumer VR technology has since advanced to more accurate forms of hand tracking. These include the Leap Motion tracker, an add-on IR camera peripheral that can pick up complex hand movements. Valve Index’s VR controllers were released in May 2019 and are designed to enable natural interactions with objects in VR games. Most recently, in December 2019, Oculus Quest became the first inside-out tracking VR system to enable an experimental controller-free hand tracking mode using its built-in headset cameras. An image of the headset camera system is shown below:

Intuitive interaction techniques are a major aspect of AR and VR applications. An interaction is intuitive when a user can manipulate virtual objects without consciously drawing on prior knowledge. Hand gestures and hand gesture recognition remain a challenging research field in AR and VR, and many techniques have not left research laboratories. One main reason is the need for technical devices attached to the user’s hand in order to track it properly. With advances in controller-less hand tracking technology such as Leap Motion and Oculus Quest, we are one step closer to developing truly intuitive interaction techniques. One particular area we want to explore is multi-gesture recognition for user interaction in 3D VR space.

Goal

As mentioned above, our project explores whether multi-gestures could serve as shortcuts for user interfaces. We want to experiment with an example such as a 3D paint program similar to Tilt Brush or A-Painter. In these programs, toggling an option from a 2D menu floating in front of one controller can be quite tedious, especially when you need to position your other controller to act as a mouse pointer. Then, to turn the option off or switch to a different tool mode (create, erase, etc.), you usually have to repeat the whole process. If multi-hand gestures worked like keyboard shortcuts such as CTRL-C and CTRL-V, we can foresee them being handy shortcuts for such 3D paint programs.

Our development environment uses the Oculus Quests obtained from class. Because of how the Oculus Hand Tracking SDK works, we set up a Unity 2019.3.8f1 project written in C# and built with the Android module, since the Oculus Quest is a mobile platform. We adapted the example scenes to recreate a hand tracking scene that does not need controllers. At the time of this writing, Oculus Quest does not support developing with both controllers and hands at the same time, and attempting to do so crashes Unity.

Process

We concluded that pinching gestures would be the easiest to implement and to tell apart. Our decision was influenced by the fact that we mainly wanted to create interesting UI interactions, not to create new custom gestures from scratch. A pinching gesture is defined as any individual finger touching the thumb, so the gestures can be generated by combining boolean values. Thankfully, the SDK provided enough functionality to distinguish which individual finger was touching the thumb at any time. This effectively gave us eight different pinch gestures, four for each hand.
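The idea of building eight gestures from per-finger booleans can be sketched as follows. This is an illustrative Python sketch, not the project's Unity C# code: the `Hand` and `Finger` enums, the `pinch_flags` dictionary, and `active_pinches` are hypothetical names, and the flags are assumed to come from whatever the hand tracking SDK reports each frame.

```python
from enum import Enum
from itertools import product


class Hand(Enum):
    LEFT = "left"
    RIGHT = "right"


class Finger(Enum):
    INDEX = "index"
    MIDDLE = "middle"
    RING = "ring"
    PINKY = "pinky"


# Every (hand, finger) pair is one pinch gesture: 2 hands x 4 fingers = 8.
ALL_PINCHES = list(product(Hand, Finger))


def active_pinches(pinch_flags):
    """Return the set of (hand, finger) pairs whose boolean flag is set.

    pinch_flags is a hypothetical per-frame snapshot: a dict mapping
    (Hand, Finger) to True when that finger touches the thumb.
    Missing entries are treated as "not pinching".
    """
    return {g for g in ALL_PINCHES if pinch_flags.get(g, False)}


# Example frame: only the left index finger is touching the left thumb.
frame = {(Hand.LEFT, Finger.INDEX): True, (Hand.RIGHT, Finger.RING): False}
current = active_pinches(frame)
```

Keeping each gesture as a plain (hand, finger) pair makes it easy to combine gestures later, for example by checking whether the same finger is pinching on both hands.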

We decided that the best way to enter and exit different states was with two-handed gestures. Double pinching with specific fingers on both hands is a very specific multi-gesture command, which helps reduce the number of false positives from someone accidentally entering a state with a one-handed gesture. For usability, our double pinches use the same pinching gesture on each hand: both index fingers, both middle fingers, both ring fingers, or both pinky fingers touching their thumbs at the same time. Using the same pinch gesture on both hands also helps reduce confusion.

Our implementation includes four main pinch modes:

- Double index finger pinch: Rotate/Scale

- Double middle finger pinch: Create/Erase

- Double ring finger pinch: Copy/Paste

- Double pinky finger pinch: Color
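A minimal sketch of how a double pinch could select one of the four modes listed above. It is written in Python for illustration rather than the project's Unity C#; `MODE_BY_FINGER`, `detect_mode_switch`, and `ModeController` are hypothetical names, and the inputs are assumed to be sets of finger names currently pinching on each hand.

```python
# Mode entered by pinching the same finger on both hands.
MODE_BY_FINGER = {
    "index": "rotate_scale",
    "middle": "create_erase",
    "ring": "copy_paste",
    "pinky": "color",
}


def detect_mode_switch(left_pinches, right_pinches):
    """Return the mode for a double pinch, or None if there is none.

    left_pinches and right_pinches are sets of finger names that are
    currently pinching on each hand (an assumed input format).
    """
    both = left_pinches & right_pinches
    # Require exactly one shared finger so ambiguous poses are ignored.
    if len(both) != 1:
        return None
    (finger,) = both
    return MODE_BY_FINGER.get(finger)


class ModeController:
    """Tracks the current mode, starting in color mode."""

    def __init__(self):
        self.mode = "color"

    def update(self, left_pinches, right_pinches):
        new_mode = detect_mode_switch(left_pinches, right_pinches)
        if new_mode is not None:
            self.mode = new_mode
        return self.mode


controller = ModeController()
controller.update({"index"}, set())    # one-handed pinch: mode unchanged
controller.update({"ring"}, {"ring"})  # double ring pinch: copy/paste mode
```

Requiring the same finger on both hands is what keeps one-handed pinches, which are used for the commands inside each mode, from switching modes by accident.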

Rotate/Scale Mode

In this mode you can interact with cubes placed in the scene to rotate or scale them. To rotate a cube, point either hand ray at it, do an index finger pinch, and hold the pinch while slowly rotating your hand. When you release the index finger pinch, the cube stops rotating. The rotation is linear in the position of the hand.

To scale a cube up, point either hand ray at it, then do a middle finger pinch and hold it. To scale a cube down, do a ring finger pinch and hold it. When you release either pinch, the cube stops scaling. The amount of scaling is linear in how long you hold the gesture.

It is important to note that you can perform and hold the pinching gesture with either hand. You can rotate or scale the cube entirely with one hand, or alternatively use your left hand ray to select the cube and then rotate or scale it with a gesture on your right hand. We gave the user both options because they might be left-handed, right-handed, or ambidextrous.
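The linear rotate and scale behavior can be sketched as two small update functions. This is a Python illustration under stated assumptions, not the project's code: the function names, the `rate` and `degrees_per_meter` constants, the minimum size, and the choice of horizontal hand displacement as the rotation input are all hypothetical.

```python
def scaled_size(size, grow_held, shrink_held, dt, rate=0.5, min_size=0.05):
    """Advance a cube's uniform scale by one frame.

    While a pinch is held, the size changes by `rate` units per second,
    so the total change is linear in how long the gesture is held.
    `dt` is the frame time in seconds.
    """
    if grow_held and not shrink_held:
        size += rate * dt
    elif shrink_held and not grow_held:
        size -= rate * dt
    # Clamp so the cube never collapses or inverts.
    return max(size, min_size)


def rotation_angle(start_angle, hand_start_x, hand_x, degrees_per_meter=180.0):
    """Map hand displacement linearly to a yaw angle in degrees.

    hand_start_x is where the hand was when the pinch began, and
    hand_x is where it is now (both in meters along one axis).
    """
    return (start_angle + degrees_per_meter * (hand_x - hand_start_x)) % 360.0


# Holding the scale-up pinch for half a second at the default rate.
bigger = scaled_size(1.0, grow_held=True, shrink_held=False, dt=0.5)
# Moving the hand half a meter while holding the rotate pinch.
angle = rotation_angle(0.0, hand_start_x=0.0, hand_x=0.5)
```

Both functions take the pinch state as plain arguments, so the same code works whether the gesture comes from the hand that is pointing at the cube or from the other hand.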

Create/Erase Mode

In this mode you can create cubes with your left hand and erase them with your right hand. To create a cube, do an index finger pinch with your left hand. A cube is created in front of your hand, along the direction it is pointing. Cubes are allowed to intersect one another.

To erase a cube, do a middle finger pinch with your right hand. If a cube intersects your right hand ray, it is erased.

We gave this mode two different functions to test how easy it is to use both hands. When drawing or creating in these paint programs, we often want to quickly erase an object we have misplaced.
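The two geometric pieces of this mode, placing a cube in front of the hand and finding the cube under a hand ray, can be sketched as below. This Python sketch is illustrative only: it assumes axis-aligned cubes stored as dictionaries with `center` and `size` keys, uses the standard slab test for ray and box intersection rather than whatever the project uses in Unity, and the function names and the 0.3 m spawn distance are hypothetical.

```python
import math


def ray_hits_cube(origin, direction, center, size):
    """Slab test against an axis-aligned cube.

    Returns the distance along the ray to the first hit, or None.
    """
    half = size / 2.0
    t_near, t_far = 0.0, math.inf
    for o, d, c in zip(origin, direction, center):
        lo, hi = c - half, c + half
        if abs(d) < 1e-9:
            # Ray is parallel to this pair of faces: it must start between them.
            if o < lo or o > hi:
                return None
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near = max(t_near, t1)
        t_far = min(t_far, t2)
        if t_near > t_far:
            return None
    return t_near


def spawn_position(hand_pos, hand_forward, distance=0.3):
    """Point `distance` meters along the normalized hand direction."""
    length = math.sqrt(sum(f * f for f in hand_forward))
    return tuple(p + distance * f / length for p, f in zip(hand_pos, hand_forward))


def erase_first_hit(cubes, origin, direction):
    """Remove and return the nearest cube hit by the ray, or None."""
    best_index, best_t = None, math.inf
    for i, cube in enumerate(cubes):
        t = ray_hits_cube(origin, direction, cube["center"], cube["size"])
        if t is not None and t < best_t:
            best_index, best_t = i, t
    if best_index is None:
        return None
    return cubes.pop(best_index)


cubes = [{"center": (0.0, 0.0, 2.0), "size": 1.0},
         {"center": (0.0, 0.0, 5.0), "size": 1.0}]
erased = erase_first_hit(cubes, origin=(0.0, 0.0, 0.0), direction=(0.0, 0.0, 1.0))
```

Erasing only the nearest hit avoids deleting cubes hidden behind the one the user is pointing at.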

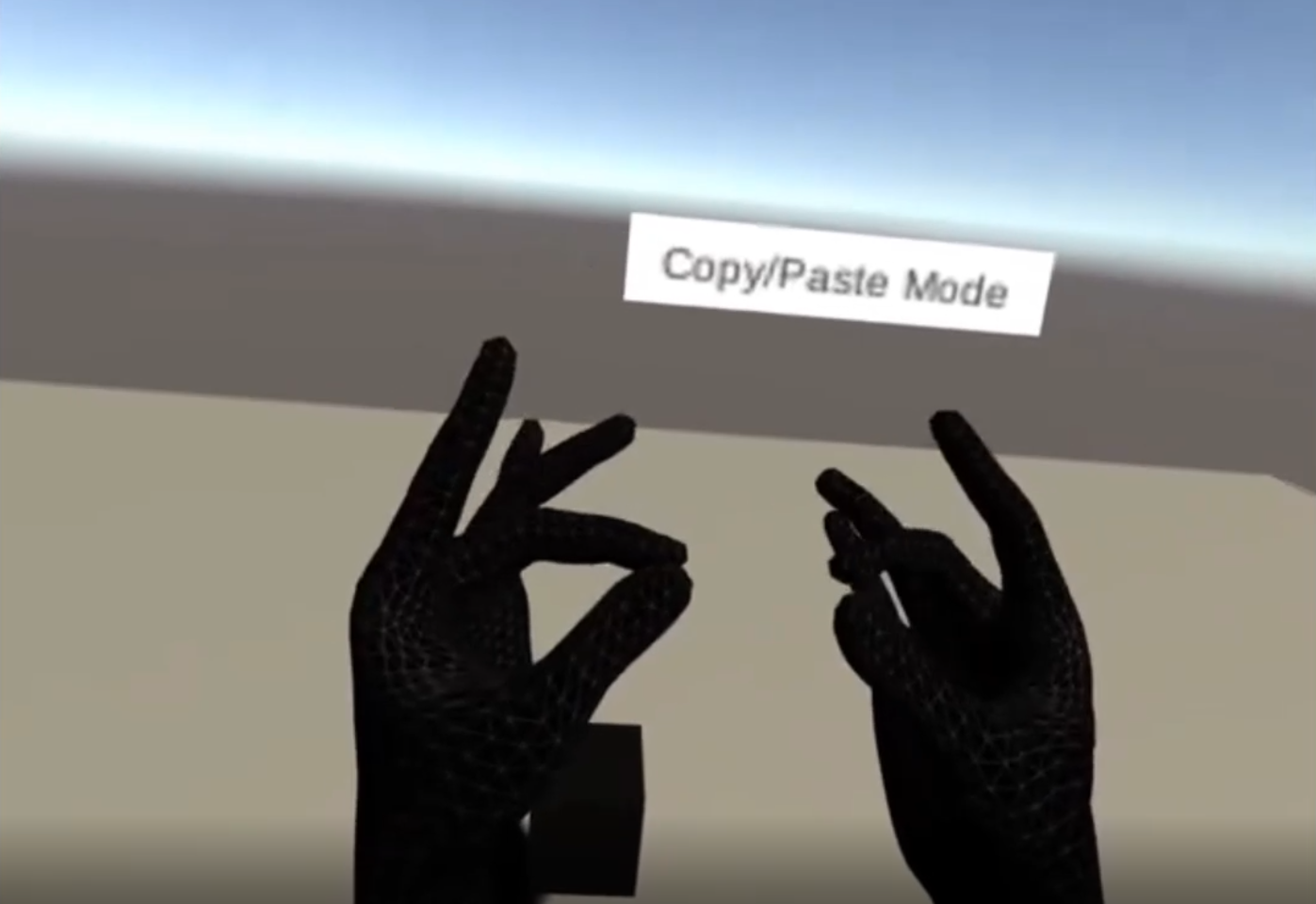

Copy/Paste Mode

In this mode you can copy an object that intersects one hand ray and paste the copy with the opposite hand. To copy a cube, simply point one of your hand rays at it; the intersected cube is the one that is copied. To paste, do an index finger pinch with your opposite hand. This pastes the copied cube in front of the opposite hand. We tried to make the placement calculations as accurate as we could.
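The copy and paste flow can be sketched as a small clipboard that remembers the last cube a hand ray pointed at. This is a hypothetical Python sketch, not the project's implementation: `CubeClipboard`, its methods, and the dictionary representation of a cube are assumptions made for illustration.

```python
import copy


class CubeClipboard:
    """Remembers the last hovered cube so the other hand can paste it."""

    def __init__(self):
        self._copied = None

    def copy_from(self, hovered_cube):
        # Called each frame with the cube under the copying hand's ray,
        # or None when the ray hits nothing (the previous copy is kept).
        if hovered_cube is not None:
            self._copied = copy.deepcopy(hovered_cube)

    def paste_at(self, position):
        # Called when the opposite hand does an index finger pinch.
        if self._copied is None:
            return None
        pasted = copy.deepcopy(self._copied)
        pasted["center"] = position
        return pasted


clipboard = CubeClipboard()
clipboard.copy_from({"center": (1.0, 1.0, 1.0), "size": 2.0, "color": "red"})
new_cube = clipboard.paste_at((0.0, 0.0, 1.0))
```

Storing a deep copy means later edits to the original cube, such as a color change, do not affect what gets pasted.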

This mode was heavily inspired by the classic copy and paste keyboard shortcuts. We recognized that the VR and AR paint programs available at the time did not support this functionality, so we took the opportunity to implement it, since it is a shortcut people commonly use. This mode lets the user quickly select objects to copy and paste using both hands.

Color Mode

The default mode you start off in is color mode. In this mode, you are granted the ability to change the color of a cube that is intersecting with either hand ray.

To change a cube's color, do a pinch gesture with any finger on either hand. Each pinch gesture corresponds to a different color, as listed below:

- Left index finger pinch: Red

- Left middle finger pinch: Green

- Left ring finger pinch: Blue

- Left pinky finger pinch: Yellow

- Right index finger pinch: Pink

- Right middle finger pinch: Teal

- Right ring finger pinch: Purple

- Right pinky finger pinch: Orange
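The mapping above is a natural fit for a lookup table keyed by hand and finger. The following Python sketch is illustrative: `COLOR_BY_PINCH`, `color_for`, and `apply_color` are hypothetical names, and a cube is assumed to be a dictionary with a `color` key.

```python
# Color assigned to each one-handed pinch, following the list above.
COLOR_BY_PINCH = {
    ("left", "index"): "red",
    ("left", "middle"): "green",
    ("left", "ring"): "blue",
    ("left", "pinky"): "yellow",
    ("right", "index"): "pink",
    ("right", "middle"): "teal",
    ("right", "ring"): "purple",
    ("right", "pinky"): "orange",
}


def color_for(hand, finger):
    """Return the color name for a pinch, or None for an unknown gesture."""
    return COLOR_BY_PINCH.get((hand, finger))


def apply_color(cube, hand, finger):
    """Recolor the cube under the hand ray, if there is one."""
    color = color_for(hand, finger)
    if cube is not None and color is not None:
        cube["color"] = color
    return cube


cube = apply_color({"color": "white"}, "left", "pinky")
```

The same table-driven approach would work for any set of up to eight toggles, not only colors.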

We wanted to experiment with all eight gestures in one mode, and changing color seemed the most appropriate way to do so. This lets the user quickly change the color of an object using eight preset colors. It does not have the accuracy or range of a color wheel, but it could be useful for applications with a limited set of color options or other similar toggles.

Results

You can see a combined demo shown above. This project was a meaningful experience for both of us. With backgrounds in game development and HCI, we were able to experiment with a new area of 3D user interfaces: gesture recognition. It allowed us to tinker firsthand and gain deeper knowledge of the field of virtual reality.

Through the implementation process, we came to understand the back-end architecture of AR and VR Unity applications and how the hand tracking system works in a mixed reality world. We hope that this work inspires people to continue exploring this exciting research field, and we cannot wait to see how future systems will integrate hand tracking with user interfaces.

If you want to read our research paper, click the picture below. Links to the paper and the GitHub repo are also provided at the top!